Converting the dubbo-demo-web project to run on Tomcat

Preparing the Tomcat base image

Preparing the Tomcat binary package

On the operations host HDSS7-200.host.com:

Tomcat 8 download link

1 | [root@hdss7-200 src]# ls -l|grep tomcat |

Basic Tomcat configuration

- Disable the AJP port

1 | <!-- <Connector port="8009" protocol="AJP/1.3" redirectPort="8443" /> --> |

- Configure logging

- Remove the 3manager and 4host-manager handlers

1 | handlers = 1catalina.org.apache.juli.AsyncFileHandler, 2localhost.org.apache.juli.AsyncFileHandler,java.util.logging.ConsoleHandler |

- Change the log level to INFO

1 | 1catalina.org.apache.juli.AsyncFileHandler.level = INFO |

- Comment out all logging configuration for 3manager and 4host-manager

1 | #3manager.org.apache.juli.AsyncFileHandler.level = FINE |
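The two file edits above can also be scripted. The following is a minimal sketch, assuming the AJP connector sits on a single line in server.xml and that the manager-related properties start with the `3manager.` or `4host-manager.` prefix. The function names are hypothetical, and the sketch does not touch the `handlers` line or the log level.

```shell
#!/bin/bash
# Hypothetical helpers; the caller passes the path of the file to edit.

# Wrap a single-line AJP connector in an XML comment.
# A connector that is already commented out is left unchanged.
disable_ajp() {
  local conf="$1" tmp
  tmp="$(mktemp)" || return 1
  if sed 's|^\([[:space:]]*\)\(<Connector port="8009" protocol="AJP/1.3".*/>\)[[:space:]]*$|\1<!-- \2 -->|' "$conf" > "$tmp"; then
    cat "$tmp" > "$conf"   # overwrite in place, keeping the file's permissions
  fi
  rm -f "$tmp"
}

# Prefix every property line that starts with 3manager. or 4host-manager. with '#'.
comment_manager_logging() {
  local conf="$1" tmp
  tmp="$(mktemp)" || return 1
  if sed -e 's/^3manager\./#&/' -e 's/^4host-manager\./#&/' "$conf" > "$tmp"; then
    cat "$tmp" > "$conf"
  fi
  rm -f "$tmp"
}
```

Assuming the usual Tomcat layout, this would be called as `disable_ajp conf/server.xml` and `comment_manager_logging conf/logging.properties` from the unpacked Tomcat directory.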

Preparing the Dockerfile

1 | FROM stanleyws/jre8:8u112 |

vi config.yml

1 | --- |

1 | wget https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.3.1/jmx_prometheus_javaagent-0.3.1.jar -O jmx_javaagent-0.3.1.jar |

vi entrypoint.sh (remember to make it executable)

1 | #!/bin/bash |

Build and push the image

1 | [root@hdss7-200 tomcat]# docker build . -t harbor.od.com/base/tomcat:v8.5.43 |

Modifying the dubbo-demo-web project

Edit dubbo-client/pom.xml

1 | <packaging>war</packaging> |

Edit Application.java

1 | import org.springframework.boot.autoconfigure.EnableAutoConfiguration; |

Create ServletInitializer.java

1 | package com.od.dubbotest; |

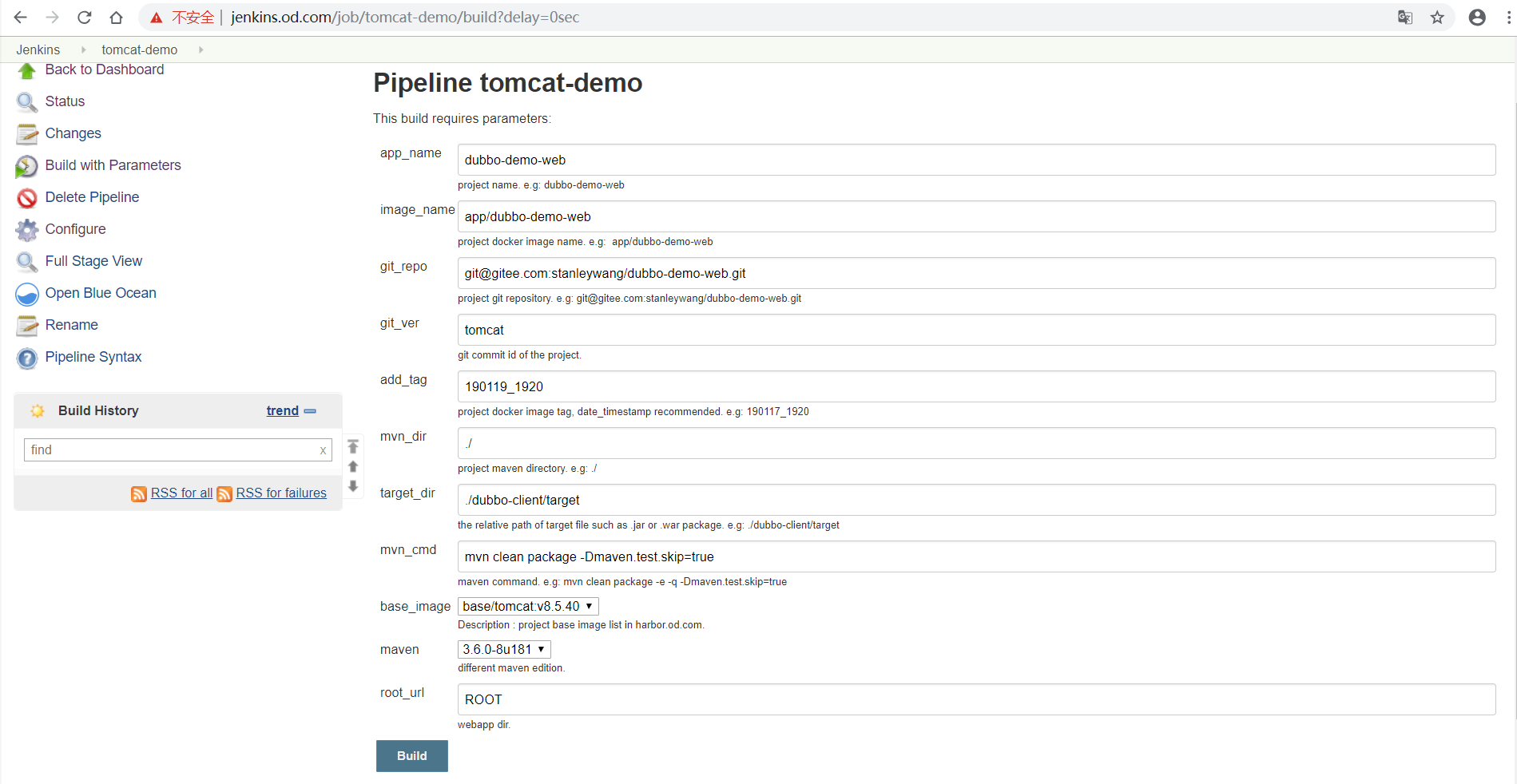

Creating a new Jenkins pipeline

Configure the new job

Log in as admin

New Item

create new jobs

Enter an item name

tomcat-demo

Pipeline -> OK

Discard old builds

Days to keep builds : 3

Max # of builds to keep : 30

This project is parameterized

Add Parameter -> String Parameter

Name : app_name

Default Value :

Description : project name. e.g: dubbo-demo-web

Add Parameter -> String Parameter

Name : image_name

Default Value :

Description : project docker image name. e.g: app/dubbo-demo-web

Add Parameter -> String Parameter

Name : git_repo

Default Value :

Description : project git repository. e.g: git@gitee.com:stanleywang/dubbo-demo-web.git

Add Parameter -> String Parameter

Name : git_ver

Default Value : tomcat

Description : git commit id of the project.

Add Parameter -> String Parameter

Name : add_tag

Default Value :

Description : project docker image tag, date_timestamp recommended. e.g: 190117_1920

Add Parameter -> String Parameter

Name : mvn_dir

Default Value : ./

Description : project maven directory. e.g: ./

Add Parameter -> String Parameter

Name : target_dir

Default Value : ./dubbo-client/target

Description : the relative path of target file such as .jar or .war package. e.g: ./dubbo-client/target

Add Parameter -> String Parameter

Name : mvn_cmd

Default Value : mvn clean package -Dmaven.test.skip=true

Description : maven command. e.g: mvn clean package -e -q -Dmaven.test.skip=true

Add Parameter -> Choice Parameter

Name : base_image

Choices :

- base/tomcat:v7.0.94

- base/tomcat:v8.5.43

- base/tomcat:v9.0.17

Description : project base image list in harbor.od.com.

Add Parameter -> Choice Parameter

Name : maven

Choices :

- 3.6.0-8u181

- 3.2.5-6u025

- 2.2.1-6u025

Description : different maven edition.

Add Parameter -> String Parameter

Name : root_url

Default Value : ROOT

Description : webapp dir.

Pipeline Script

1 | pipeline { |

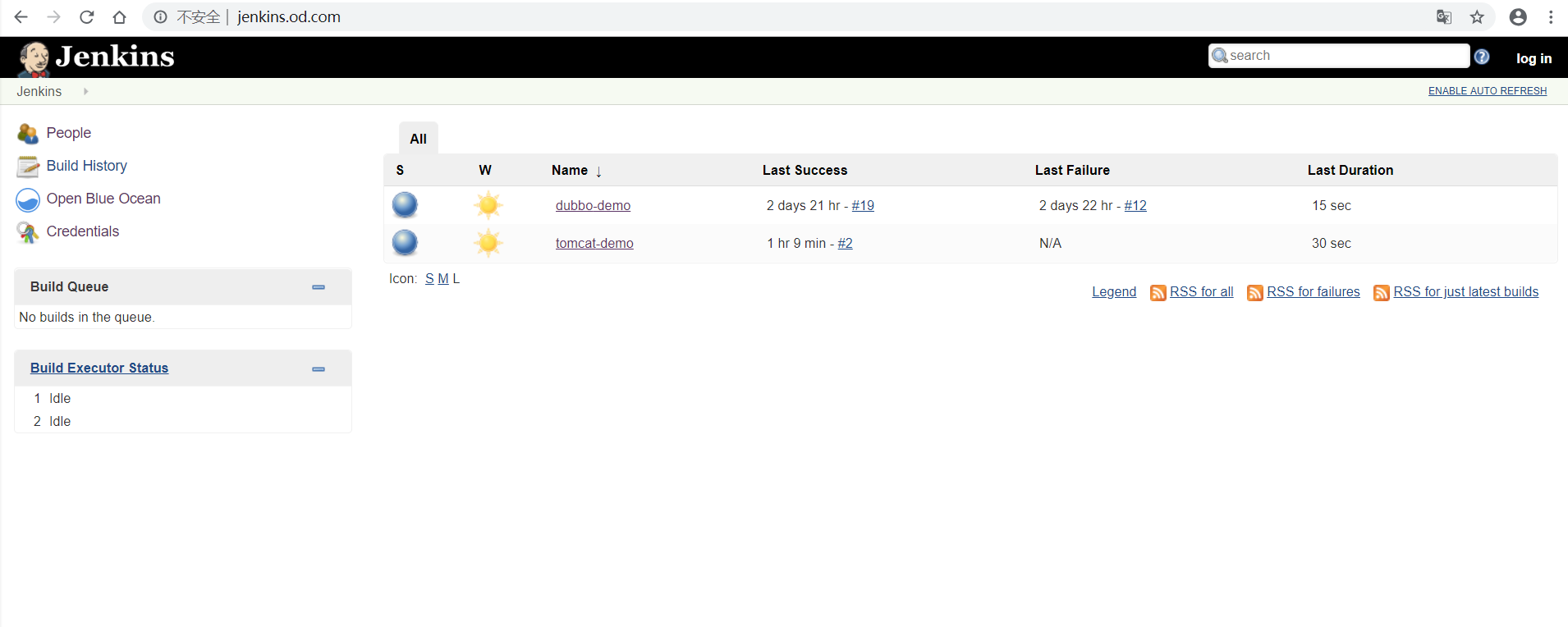

构建应用镜像

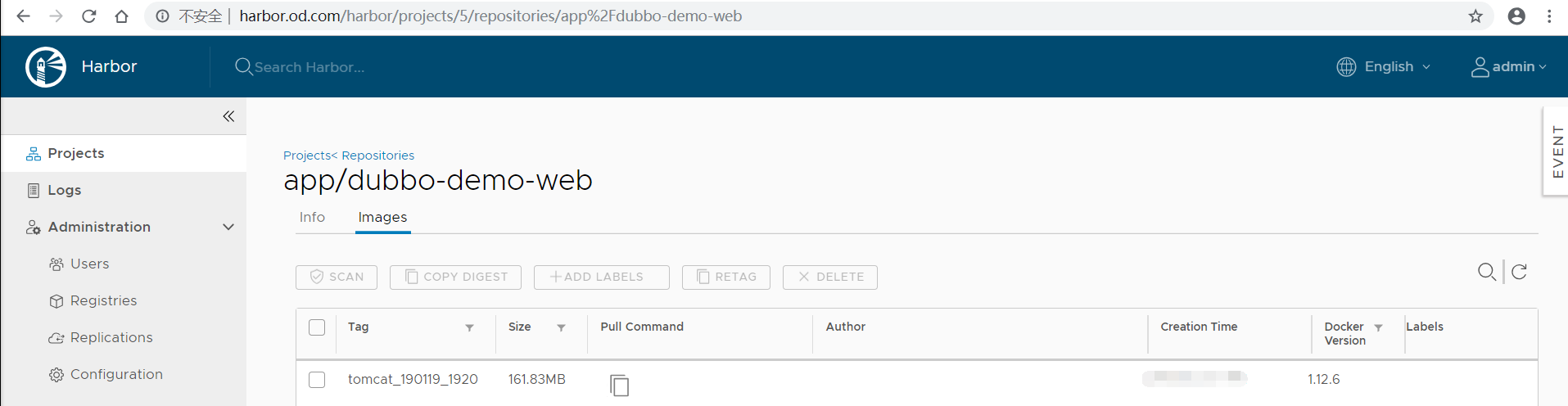

使用Jenkins进行CI,并查看harbor仓库

准备k8s的资源配置清单

不再需要单独准备资源配置清单

应用资源配置清单

k8s的dashboard上直接修改image的值为jenkins打包出来的镜像

文档里的例子是:harbor.od.com/app/dubbo-demo-web:tomcat_190119_1920
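The example image name appears to combine the image_name, git_ver and add_tag job parameters. The sketch below rebuilds it under that assumption. The `<git_ver>_<add_tag>` format is inferred from this single example, not taken from the pipeline script, and both function names are hypothetical.

```shell
#!/bin/bash
# Compose <registry>/<image_name>:<git_ver>_<add_tag>.
# The tag format is an assumption inferred from the example image name.
image_ref() {
  local image_name="$1" git_ver="$2" add_tag="$3" registry="${4:-harbor.od.com}"
  printf '%s/%s:%s_%s\n' "$registry" "$image_name" "$git_ver" "$add_tag"
}

# Produce a yymmdd_HHMM stamp in the style of the add_tag example (190117_1920).
make_add_tag() {
  date +%y%m%d_%H%M
}
```

For instance, `image_ref app/dubbo-demo-web tomcat 190119_1920` prints the example value, which can then be pasted into the dashboard's image field.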

Access in a browser

http://demo.od.com?hello=wangdao

Checking that Tomcat is running

On any compute node:

1 | [root@hdss7-22 ~]# kubectl get pods -n app |

Monitoring the Kubernetes cluster with Prometheus and Grafana

Deploying kube-state-metrics

On the operations host HDSS7-200.host.com:

Prepare the kube-state-metrics image

1 | [root@hdss7-200 ~]# docker pull quay.io/coreos/kube-state-metrics:v1.5.0 |

Prepare the resource manifests

vi /data/k8s-yaml/kube-state-metrics/rbac.yaml

1 | apiVersion: v1 |

vi /data/k8s-yaml/kube-state-metrics/deployment.yaml

1 | apiVersion: extensions/v1beta1 |

Apply the resource manifests

On any compute node:

1 | [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/kube-state-metrics/rbac.yaml |

Check the startup status

1 | [root@hdss7-21 ~]# kubectl get pods -n kube-system|grep kube-state-metrics |

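Deploying node-exporter

On the operations host HDSS7-200.host.com:

Prepare the node-exporter image

node-exporter official Docker Hub page

node-exporter official GitHub repository

1 | [root@hdss7-200 ~]# docker pull prom/node-exporter:v0.15.0 |

Prepare the resource manifests

1 | kind: DaemonSet |

Apply the resource manifests

On any compute node: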

1 | [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/node-exporter/node-exporter-ds.yaml |

Deploying cadvisor

On the operations host HDSS7-200.host.com:

Prepare the cadvisor image

cadvisor official Docker Hub page

cadvisor official GitHub repository

1 | [root@hdss7-200 ~]# docker pull google/cadvisor:v0.28.3 |

Prepare the resource manifests

vi /data/k8s-yaml/cadvisor/daemonset.yaml

1 | apiVersion: apps/v1 |

Fix the symlink on the compute nodes

On all compute nodes:

1 | [root@hdss7-21 ~]# mount -o remount,rw /sys/fs/cgroup/ |

Apply the resource manifests

On any compute node:

1 | [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/cadvisor/daemonset.yaml |

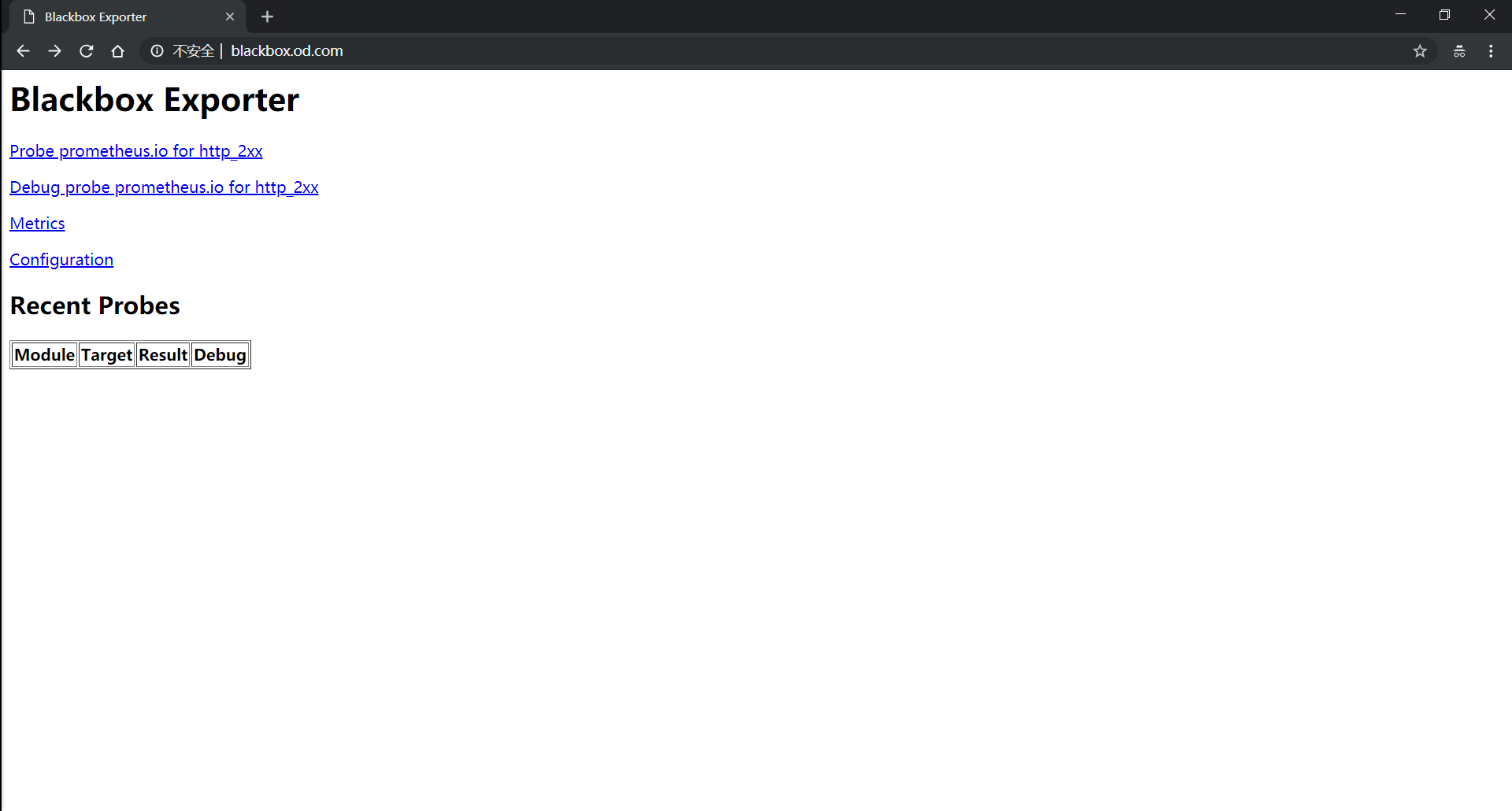

Deploying blackbox-exporter

On the operations host HDSS7-200.host.com:

Prepare the blackbox-exporter image

blackbox-exporter official Docker Hub page

blackbox-exporter official GitHub repository

1 | [root@hdss7-200 ~]# docker pull prom/blackbox-exporter:v0.14.0 |

Prepare the resource manifests

vi /data/k8s-yaml/blackbox-exporter/configmap.yaml

1 | apiVersion: v1 |

vi /data/k8s-yaml/blackbox-exporter/deployment.yaml

1 | kind: Deployment |

vi /data/k8s-yaml/blackbox-exporter/service.yaml

1 | kind: Service |

vi /data/k8s-yaml/blackbox-exporter/ingress.yaml

1 | apiVersion: extensions/v1beta1 |

Add the DNS record

On HDSS7-11.host.com:

1 | blackbox 60 IN A 10.4.7.10 |
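The same kind of A record is added again later for prometheus, grafana, km and kibana, so the step can be made repeatable. This is a minimal sketch, assuming a plain-text zone file whose path the caller supplies (the path is not given in this section). The function name is hypothetical, and reloading the DNS server afterwards is left out.

```shell
#!/bin/bash
# Append "<name> <ttl> IN A <ip>" to a zone file unless an A record for
# that name already exists. The zone file path is supplied by the caller.
ensure_a_record() {
  local zone="$1" name="$2" ip="$3" ttl="${4:-60}"
  if grep -Eq "^${name}[[:space:]]+[0-9]+[[:space:]]+IN[[:space:]]+A[[:space:]]" "$zone"; then
    return 0   # record already present, nothing to do
  fi
  printf '%s %s IN A %s\n' "$name" "$ttl" "$ip" >> "$zone"
}
```

Calling `ensure_a_record <zone-file> blackbox 10.4.7.10` twice leaves a single record in the file. Whatever server hosts the zone will usually need a reload before the name resolves.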

Apply the resource manifests

On any compute node:

1 | [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/blackbox-exporter/configmap.yaml |
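Four manifests were prepared for blackbox-exporter (configmap.yaml, deployment.yaml, service.yaml and ingress.yaml), and each has to be applied. The loop below is a minimal sketch of doing that in one call. It assumes every file is served under http://k8s-yaml.od.com/<component>/, as the URLs in this document suggest. The function name and the `KUBECTL` override variable are hypothetical.

```shell
#!/bin/bash
# Apply several manifests of one component from the k8s-yaml.od.com file server.
# KUBECTL can be overridden (for example with "echo") to preview the commands.
apply_manifests() {
  local component="$1" file
  shift
  for file in "$@"; do
    "${KUBECTL:-kubectl}" apply -f "http://k8s-yaml.od.com/${component}/${file}" || return 1
  done
}
```

For example, `apply_manifests blackbox-exporter configmap.yaml deployment.yaml service.yaml ingress.yaml` stops at the first manifest that fails to apply.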

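Access in a browser

Deploying Prometheus

On the operations host HDSS7-200.host.com:

Prepare the Prometheus image

Prometheus official Docker Hub page

Prometheus official GitHub repository

1 | [root@hdss7-200 ~]# docker pull prom/prometheus:v2.9.1 |

Prepare the resource manifests

On the operations host HDSS7-200.host.com: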

1 | [root@hdss7-200 k8s-yaml]# mkdir /data/k8s-yaml/prometheus && mkdir -p /data/nfs-volume/prometheus/etc && cd /data/k8s-yaml/prometheus |

vi /data/k8s-yaml/prometheus/rbac.yaml

1 | apiVersion: v1 |

vi /data/k8s-yaml/prometheus/deployment.yaml

1 | apiVersion: extensions/v1beta1 |

vi /data/k8s-yaml/prometheus/service.yaml

1 | apiVersion: v1 |

vi /data/k8s-yaml/prometheus/ingress.yaml

1 | apiVersion: extensions/v1beta1 |

Prepare the Prometheus configuration file

On the compute node HDSS7-21.host.com:

- Copy the certificates

1 | [root@hdss7-21 ~]# mkdir -pv /data/nfs-volume/prometheus/{etc,prom-db} |

- Prepare the configuration

1 | global: |

Apply the resource manifests

On any compute node:

1 | [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/prometheus/rbac.yaml |

Add the DNS record

On HDSS7-11.host.com:

1 | prometheus 60 IN A 10.4.7.10 |

Access in a browser

What Prometheus monitors

Targets(jobs)

etcd

Monitors the etcd service

| key | value |
|---|---|
| etcd_server_has_leader | 1 |
| etcd_http_failed_total | 1 |
| … | … |

kubernetes-apiserver

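Monitors the apiserver service

kubernetes-kubelet

Monitors the kubelet service

kubernetes-kube-state

Monitors basic cluster information

node-exporter

Monitors node information

kube-state-metrics

Monitors pod information

traefik

Monitors traefik-ingress-controller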

| key | value |
|---|---|
| traefik_entrypoint_requests_total{code="200",entrypoint="http",method="PUT",protocol="http"} | 138 |
| traefik_entrypoint_requests_total{code="200",entrypoint="http",method="GET",protocol="http"} | 285 |
| traefik_entrypoint_open_connections{entrypoint="http",method="PUT",protocol="http"} | 1 |
| … | … |

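Note: add the annotations to traefik's pod controller and restart the pods for monitoring to take effect.

Configuration example:

1 | "annotations": { |

blackbox*

Monitors whether services are alive

- blackbox_tcp_pod_probe

Monitors whether TCP services are alive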

| key | value |
|---|---|
| probe_success | 1 |
| probe_ip_protocol | 4 |
| probe_failed_due_to_regex | 0 |
| probe_duration_seconds | 0.000597546 |
| probe_dns_lookup_time_seconds | 0.00010898 |

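Note: add the annotations to the pod controller and restart the pods for monitoring to take effect.

Configuration example:

1 | "annotations": { |

- blackbox_http_pod_probe

Monitors whether HTTP services are alive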

| key | value |
|---|---|
| probe_success | 1 |
| probe_ip_protocol | 4 |
| probe_http_version | 1.1 |
| probe_http_status_code | 200 |
| probe_http_ssl | 0 |
| probe_http_redirects | 1 |
| probe_http_last_modified_timestamp_seconds | 1.553861888e+09 |
| probe_http_duration_seconds{phase="transfer"} | 0.000238343 |
| probe_http_duration_seconds{phase="tls"} | 0 |
| probe_http_duration_seconds{phase="resolve"} | 5.4095e-05 |
| probe_http_duration_seconds{phase="processing"} | 0.000966104 |
| probe_http_duration_seconds{phase="connect"} | 0.000520821 |
| probe_http_content_length | 716 |
| probe_failed_due_to_regex | 0 |
| probe_duration_seconds | 0.00272609 |
| probe_dns_lookup_time_seconds | 5.4095e-05 |

Note: add the annotations to the pod controller and restart the pods for monitoring to take effect.

Configuration example:

1 | "annotations": { |
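In the blackbox tables above, probe_success is the value that says whether the target answered. The helpers below are a minimal sketch for reading such a value from metrics text on standard input, assuming the usual one-sample-per-line `name value` layout. Both function names are hypothetical.

```shell
#!/bin/bash
# Print the value of the first sample whose name (including any {labels})
# equals $1. Exits non-zero if the metric is not present in the input.
metric_value() {
  awk -v name="$1" '$1 == name { print $2; found = 1; exit } END { exit !found }'
}

# Succeed only if the probe reported probe_success 1.
probe_alive() {
  [ "$(metric_value probe_success)" = "1" ]
}
```

A typical use would be to pipe the text returned by an exporter endpoint into `probe_alive` and alert when it fails.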

kubernetes-pods*

Monitors JVM information

| key | value |
|---|---|
| jvm_info{version="1.7.0_80-b15",vendor="Oracle Corporation",runtime="Java(TM) SE Runtime Environment",} | 1.0 |
| jmx_config_reload_success_total | 0.0 |
| process_resident_memory_bytes | 4.693897216E9 |
| process_virtual_memory_bytes | 1.2138840064E10 |
| process_max_fds | 65536.0 |
| process_open_fds | 123.0 |
| process_start_time_seconds | 1.54331073249E9 |
| process_cpu_seconds_total | 196465.74 |
| jvm_buffer_pool_used_buffers{pool="mapped",} | 0.0 |
| jvm_buffer_pool_used_buffers{pool="direct",} | 150.0 |
| jvm_buffer_pool_capacity_bytes{pool="mapped",} | 0.0 |
| jvm_buffer_pool_capacity_bytes{pool="direct",} | 6216688.0 |
| jvm_buffer_pool_used_bytes{pool="mapped",} | 0.0 |
| jvm_buffer_pool_used_bytes{pool="direct",} | 6216688.0 |
| jvm_gc_collection_seconds_sum{gc="PS MarkSweep",} | 1.867 |
| … | … |

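Note: add the annotations to the pod controller and restart the pods for monitoring to take effect.

Configuration example:

1 | "annotations": { |

Connecting the traefik service to Prometheus monitoring

In the dashboard:

Under kube-system namespace -> daemonset -> traefik-ingress-controller -> spec -> template -> metadata, add: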

1 | "annotations": { |

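Delete the pods to restart traefik, then check the monitoring.

Then add the blackbox monitoring settings:

1 | "annotations": { |

Connecting the dubbo-service service to Prometheus monitoring

In the dashboard:

Under app namespace -> deployment -> dubbo-demo-service -> spec -> template -> metadata, add: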

1 | "annotations": { |

Delete the pod so that it is recreated, then check the monitoring.

Connecting the dubbo-consumer service to Prometheus monitoring

Under app namespace -> deployment -> dubbo-demo-consumer -> spec -> template -> metadata, add:

1 | "annotations": { |

Delete the pod so that it is recreated, then check the monitoring.

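Deploying Grafana

On the operations host HDSS7-200.host.com:

Prepare the Grafana image

Grafana official Docker Hub page

Grafana official GitHub repository

Grafana official website

1 | [root@hdss7-200 ~]# docker pull grafana/grafana:6.1.4 |

Prepare the resource manifests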

vi /data/k8s-yaml/grafana/rbac.yaml

1 | apiVersion: rbac.authorization.k8s.io/v1 |

vi /data/k8s-yaml/grafana/deployment.yaml

1 | apiVersion: extensions/v1beta1 |

vi /data/k8s-yaml/grafana/service.yaml

1 | apiVersion: v1 |

vi /data/k8s-yaml/grafana/ingress.yaml

1 | apiVersion: extensions/v1beta1 |

Apply the resource manifests

On any compute node:

1 | [root@hdss7-21 ~]# kubectl apply -f http://k8s-yaml.od.com/grafana/deployment.yaml |

Add the DNS record

On HDSS7-11.host.com:

1 | grafana 60 IN A 10.4.7.10 |

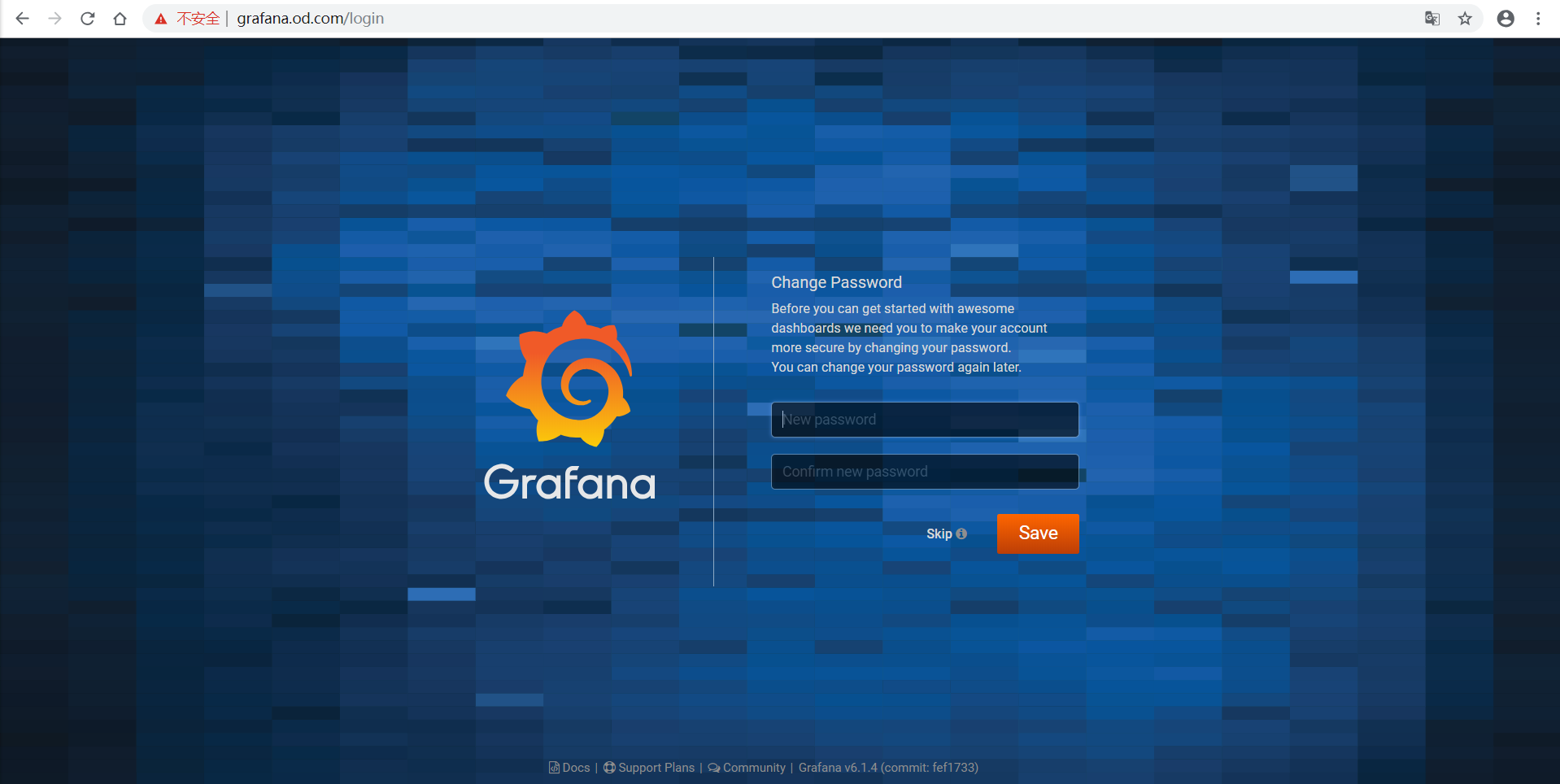

Access in a browser

- Username: admin

- Password: admin

After logging in, you must change the administrator password.

Configuring the Grafana UI

Appearance

Configuration -> Preferences

UI Theme

Light

Home Dashboard

Default

Timezone

Local browser time

save

Plugins

Configuration -> Plugins

- Kubernetes App

Installation method 1:

1 | grafana-cli plugins install grafana-kubernetes-app |

Installation method 2:

Download link

1 | [root@hdss7-21 plugins]# wget https://grafana.com/api/plugins/grafana-kubernetes-app/versions/1.0.1/download -O grafana-kubernetes-app.zip |

- Clock Panel

Installation method 1:

1 | grafana-cli plugins install grafana-clock-panel |

Installation method 2:

Download link

- Pie Chart

Installation method 1:

1 | grafana-cli plugins install grafana-piechart-panel |

Installation method 2:

Download link

- D3 Gauge

Installation method 1:

1 | grafana-cli plugins install briangann-gauge-panel |

Installation method 2:

Download link

- Discrete

Installation method 1:

1 | grafana-cli plugins install natel-discrete-panel |

Installation method 2:

Download link

Restart the Grafana pod

Enable the plugins one by one

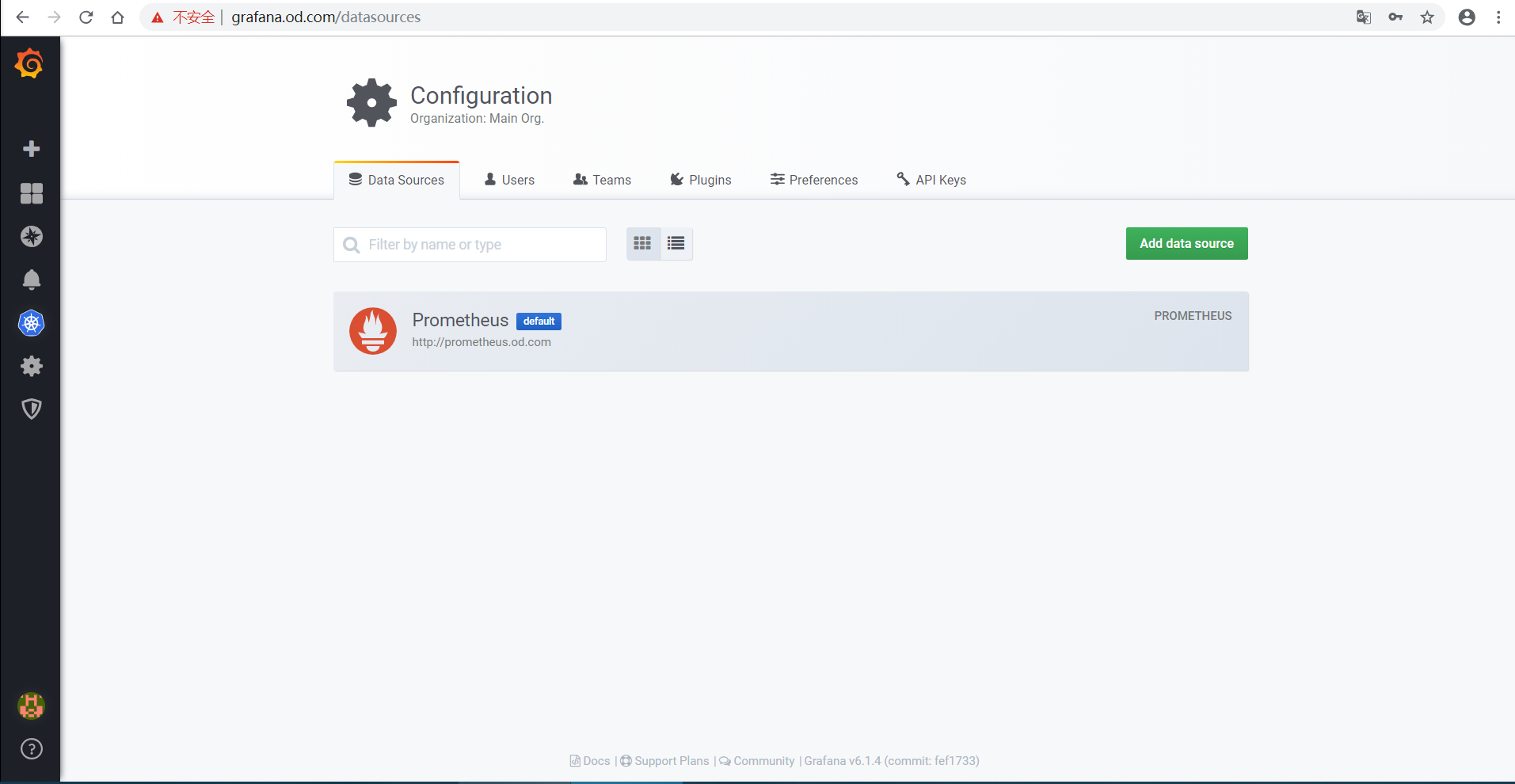

Configuring the Grafana data source

Configuration -> Data Sources

Select Prometheus

- HTTP

| key | value |
|---|---|
| URL | http://prometheus.od.com |
| Access | Server(Default) |

- Save & Test

Configuring the Kubernetes cluster dashboard

kubernetes -> +New Cluster

- Add a new cluster

| key | value |
|---|---|
| Name | myk8s |

- HTTP

| key | value |
|---|---|
| URL | https://10.4.7.10:7443 |
| Access | Server(Default) |

- Auth

| key | value |
|---|---|
| TLS Client Auth | checked |
| With Ca Cert | checked |

Paste the contents of ca.pem, client.pem and client-key.pem into the text boxes.

- Prometheus Read

| key | value |
|---|---|
| Datasource | Prometheus |

- Save

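Note:

- In the K8S Container dashboard, make this change in every panel:

pod_name -> container_label_io_kubernetes_pod_name

See the attachments.

Configuring custom dashboards

Based on the data in the Prometheus data source, configure the following dashboards: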

- etcd dashboard

- traefik dashboard

- generic dashboard

- JMX dashboard

- blackbox dashboard

See the attachments.

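Collecting application logs in the Kubernetes cluster with the ELK Stack

Deploying ElasticSearch

Official website

Official GitHub repository

Download link

On HDSS7-12.host.com:

Installation

1 | [root@hdss7-12 src]# ls -l|grep elasticsearch-6.8.1.tar.gz |

Configuration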

elasticsearch.yml

1 | [root@hdss7-12 src]# mkdir -p /data/elasticsearch/{data,logs} |

jvm.options

1 | [root@hdss7-12 elasticsearch]# vi config/jvm.options |

Create an unprivileged user

1 | [root@hdss7-12 elasticsearch]# useradd -s /bin/bash -M es |

File descriptors

1 | es hard nofile 65536 |

Tune the kernel parameters

1 | [root@hdss7-12 elasticsearch]# sysctl -w vm.max_map_count=262144 |
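Note that `sysctl -w` changes the running kernel only, so the value is lost on reboot unless it is also written to a sysctl configuration file. The check below is a minimal sketch for confirming the setting before starting ElasticSearch. The function name is hypothetical, and 262144 is the value used in the command above.

```shell
#!/bin/bash
# Succeed if the given vm.max_map_count value meets the required minimum.
map_count_ok() {
  local current="$1" required="${2:-262144}"
  [ "$current" -ge "$required" ]
}

# Example (Linux only), reading the live value from procfs:
#   map_count_ok "$(cat /proc/sys/vm/max_map_count)" || echo "vm.max_map_count is too low"
```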

Start

1 | [root@hdss7-12 elasticsearch]# su - es |

Adjust the ES log template

1 | [root@hdss7-12 elasticsearch]# curl -H "Content-Type:application/json" -XPUT http://10.4.7.12:9200/_template/k8s -d '{ |

Deploying Kafka

Official website

Official GitHub repository

Download link

On HDSS7-11.host.com:

Installation

1 | [root@hdss7-11 src]# ls -l|grep kafka |

Configuration

1 | log.dirs=/data/kafka/logs |

Start

1 | [root@hdss7-11 kafka]# bin/kafka-server-start.sh -daemon config/server.properties |

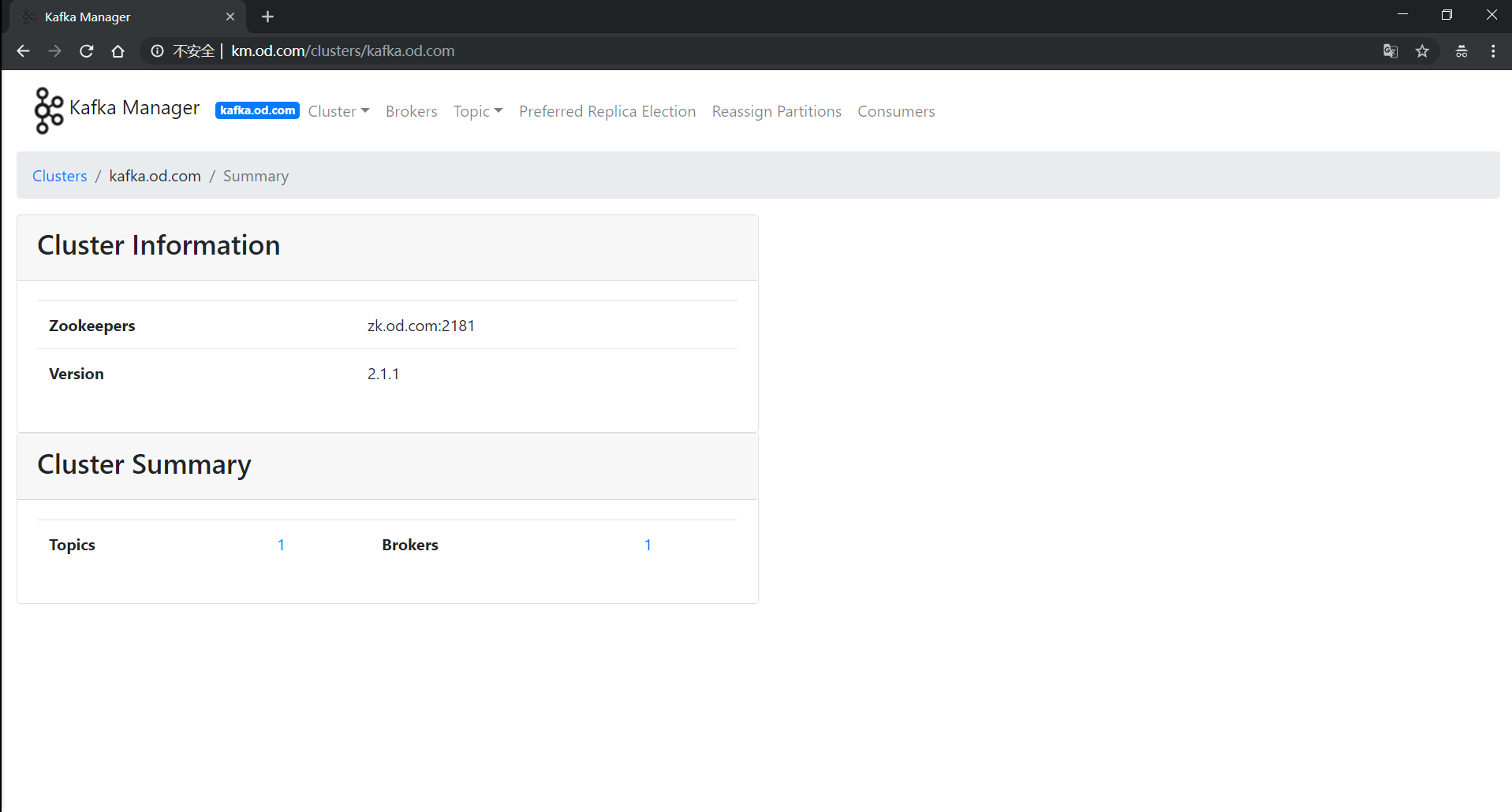

Deploying kafka-manager

Official GitHub repository

Source download link

On the operations host HDSS7-200.host.com:

Method 1, step 1: prepare the Dockerfile

1 | FROM hseeberger/scala-sbt |

Method 1, step 2: build the Docker image

1 | [root@hdss7-200 kafka-manager]# docker build . -t harbor.od.com/infra/kafka-manager:v2.0.0.2 |

Method 2: pull a prebuilt Docker image

1 | [root@hdss7-200 ~]# docker pull sheepkiller/kafka-manager:stable |

Prepare the resource manifests

vi /data/k8s-yaml/kafka-manager/deployment.yaml

1 | kind: Deployment |

vi /data/k8s-yaml/kafka-manager/svc.yaml

1 | kind: Service |

vi /data/k8s-yaml/kafka-manager/ingress.yaml

1 | kind: Ingress |

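Apply the resource manifests

On any compute node:

1 | [root@hdss7-21 kafka-manager]# kubectl apply -f http://k8s-yaml.od.com/kafka-manager/deployment.yaml |

Add the DNS record

On HDSS7-11.host.com:

1 | km 60 IN A 10.4.7.10 |

Access in a browser

Deploying filebeat

Official download link

On the operations host HDSS7-200.host.com:

Build the Docker image

Prepare the Dockerfile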

vi /data/dockerfile/filebeat/Dockerfile

1 | FROM debian:jessie |

vi /data/dockerfile/filebeat/docker-entrypoint.sh

1 | #!/bin/bash |

Build the image

1 | [root@hdss7-200 filebeat]# docker build . -t harbor.od.com/infra/filebeat:v7.2.0 |

Modify the resource manifest

Use the Tomcat version of the dubbo-demo-consumer image

1 | kind: Deployment |

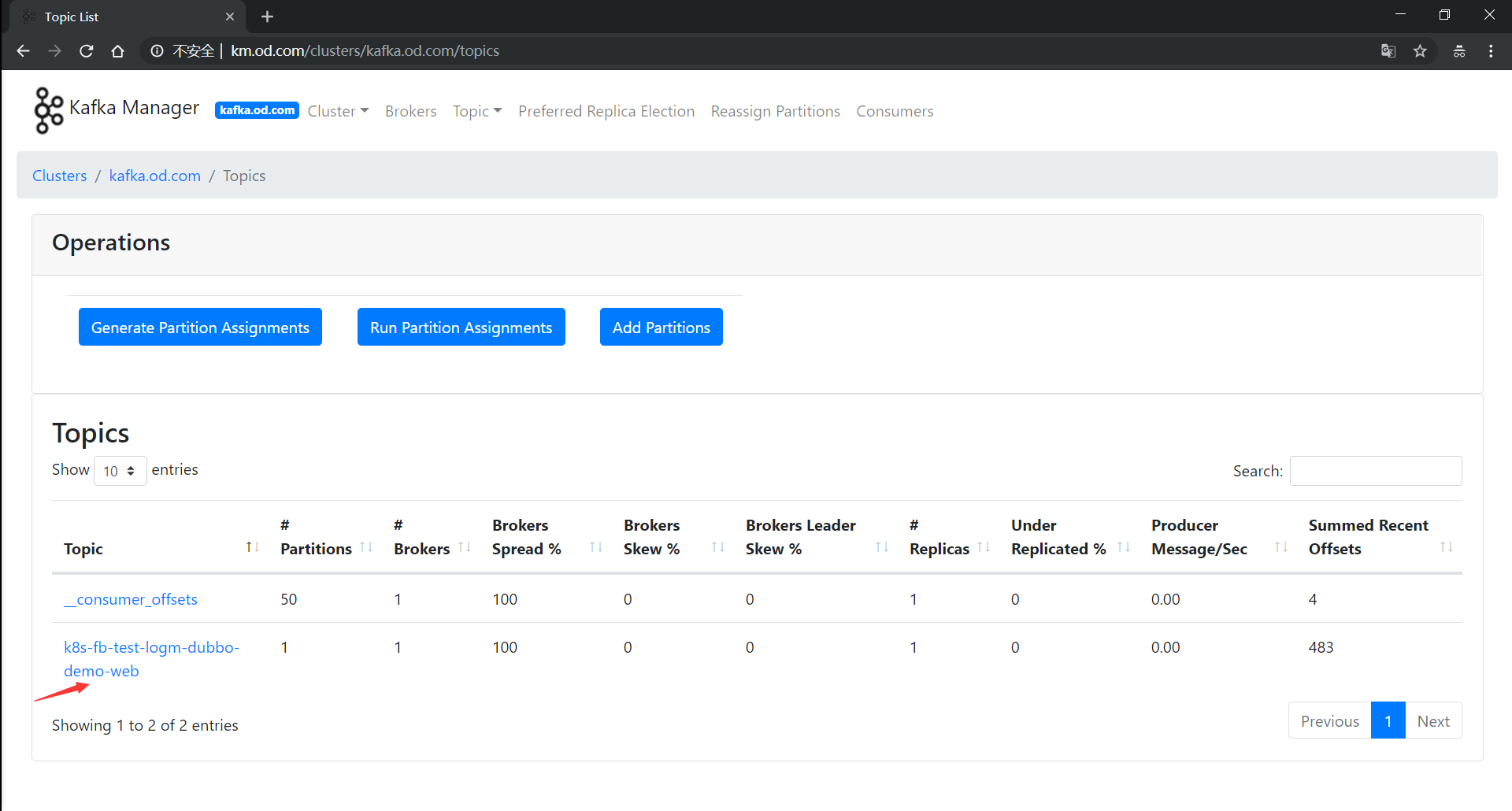

Open http://km.od.com in a browser

If the topic shows up in kafka-manager, the setup is working.

Verify the data

1 | ./kafka-console-consumer.sh --bootstrap-server 10.4.7.11:9092 --topic k8s-fb-test-logm-dubbo-demo-web --from-beginning |
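With `--from-beginning` alone the console consumer keeps running until it is interrupted. The sketch below wraps the same command with `--max-messages` so that it exits after a few records. The topic naming scheme `k8s-fb-<env>-logm-<project>` is an assumption inferred from the single topic name above, and the function names and the `KAFKA_CONSUMER` override variable are hypothetical.

```shell
#!/bin/bash
# Build a topic name following the pattern seen in the example topic.
# The pattern itself is inferred, not confirmed by the filebeat config.
kafka_topic() {
  local env="$1" proj="$2"
  printf 'k8s-fb-%s-logm-%s\n' "$env" "$proj"
}

# Read the first N messages (default 10) of a project's topic, then exit.
# KAFKA_CONSUMER can point at the script's full path, or at "echo" for a preview.
peek_topic() {
  local env="$1" proj="$2" count="${3:-10}"
  "${KAFKA_CONSUMER:-./kafka-console-consumer.sh}" \
    --bootstrap-server 10.4.7.11:9092 \
    --topic "$(kafka_topic "$env" "$proj")" \
    --from-beginning --max-messages "$count"
}
```

For example, `peek_topic test dubbo-demo-web 5` reads five messages from the topic shown above.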

部署logstash

运维主机HDSS7-200.host.com上:

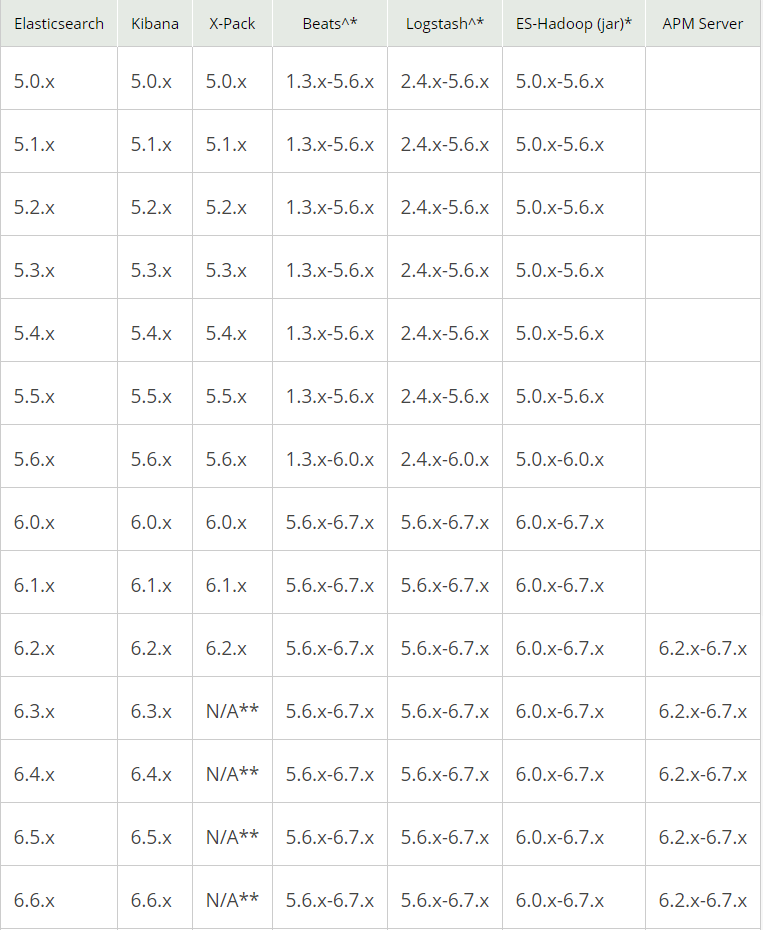

选版本

准备docker镜像

- 下载官方镜像

1 | [root@hdss7-200 ~]# docker pull logstash:6.7.2 |

- 自定义Dockerfile

1 | From harbor.od.com/public/logstash:v6.7.2 |

1 | http.host: "0.0.0.0" |

- Build the custom image

1 | [root@hdss7-200 logstash]# docker build . -t harbor.od.com/infra/logstash:v6.7.2 |

Run the Docker image

- Create the configuration

1 | input { |

- Run the logstash image

1 | [root@hdss7-200 ~]# docker run -d --name logstash-test -v /etc/logstash:/etc/logstash harbor.od.com/infra/logstash:v6.7.2 -f /etc/logstash/logstash-test.conf |

- Verify the indices in ElasticSearch

1 | [root@hdss7-200 ~]# curl http://10.4.7.12:9200/_cat/indices?v |
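To confirm that logstash is writing, it can help to filter the `_cat/indices?v` listing down to the log indices. This is a minimal sketch, assuming the default column order of that listing (index name in the third column) and an index prefix of `k8s-`, which is inferred from the k8s-test-* and k8s-prod-* patterns used in the Kibana section. The function name is hypothetical.

```shell
#!/bin/bash
# Read "_cat/indices?v" output on stdin and print the names of indices
# that start with "k8s-", skipping the header row.
list_k8s_indices() {
  awk 'NR > 1 && $3 ~ /^k8s-/ { print $3 }' | sort
}

# Example:
#   curl -s 'http://10.4.7.12:9200/_cat/indices?v' | list_k8s_indices
```

An empty result suggests that no log index has been created yet.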

Deploying Kibana

On the operations host HDSS7-200.host.com:

Prepare the Docker image

1 | [root@hdss7-200 ~]# docker pull kibana:6.8.1 |

Add the DNS record

On HDSS7-11.host.com:

1 | kibana 60 IN A 10.4.7.10 |

Prepare the resource manifests

vi /data/k8s-yaml/kibana/deployment.yaml

1 | kind: Deployment |

vi /data/k8s-yaml/kibana/svc.yaml

1 | kind: Service |

vi /data/k8s-yaml/kibana/ingress.yaml

1 | kind: Ingress |

应用资源配置清单

任意运算节点上:

1 | [root@hdss7-21 ~]# kubectl apply -f deployment.yaml |

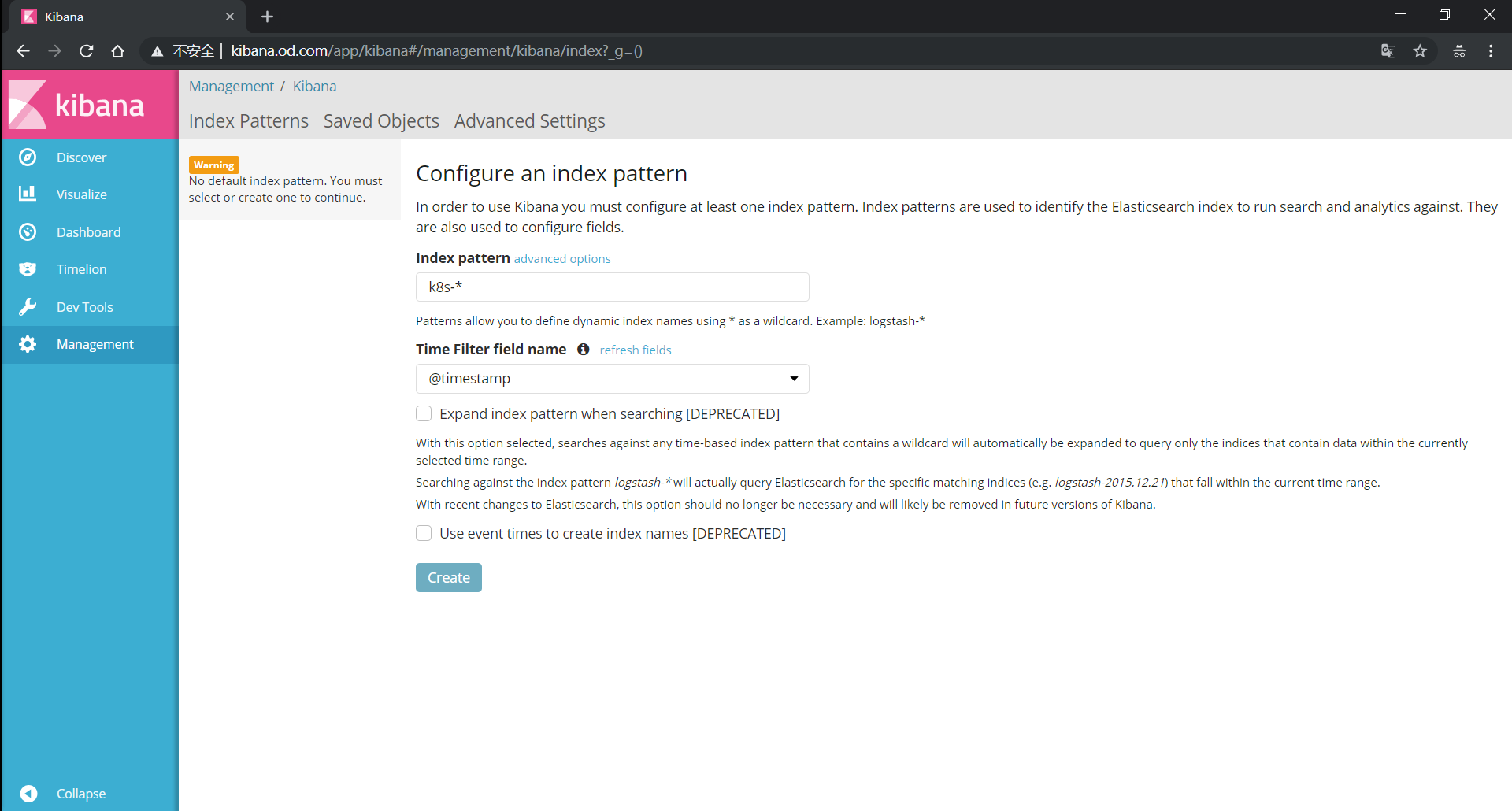

浏览器访问

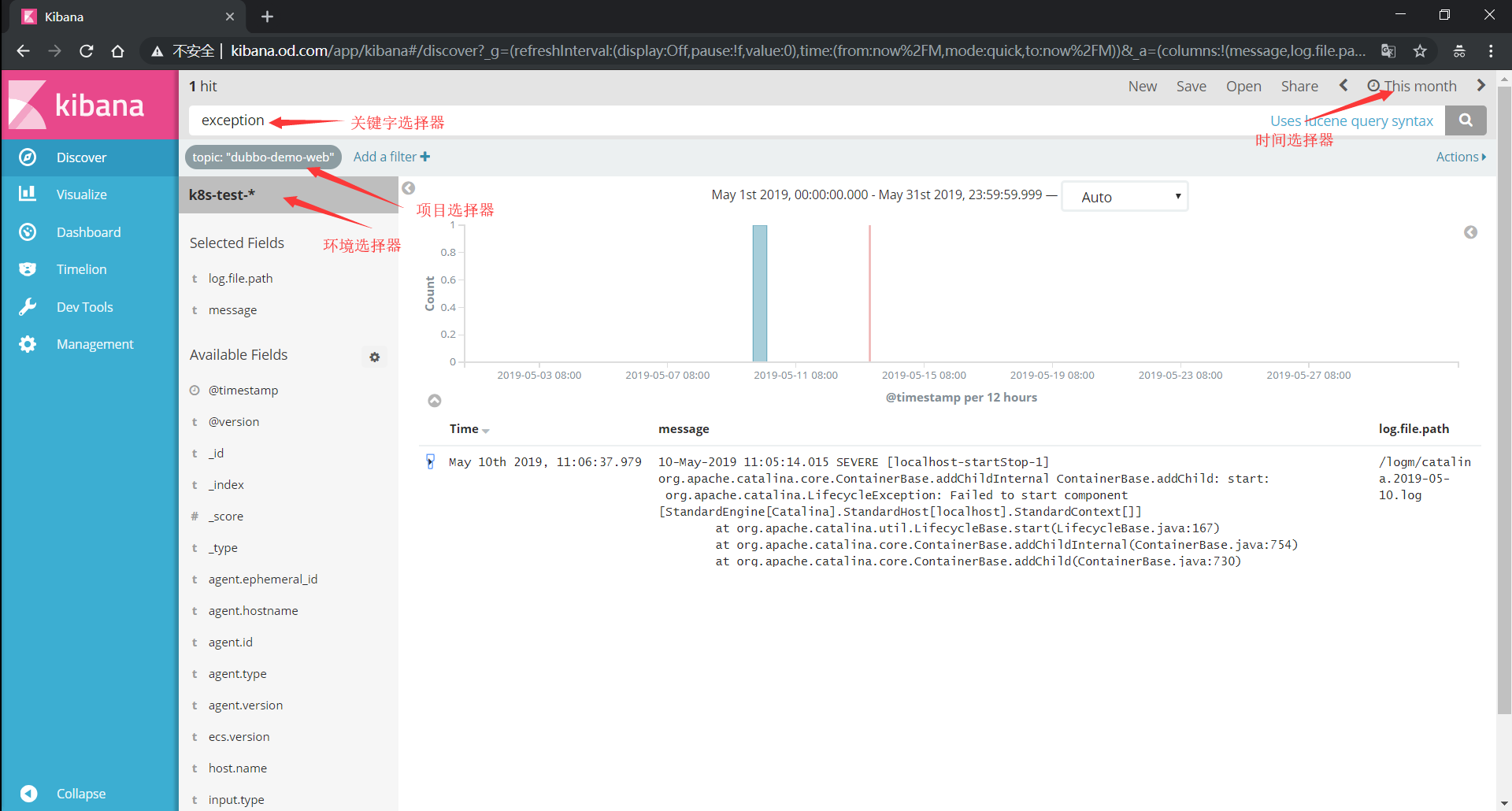

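Using Kibana

Field selection area

@timestamp

The timestamp of the log entry

log.file.path

The name of the log file

message

The content of the log entry

Time picker

- Select the time range of the logs

Quick

Absolute

Relative

Environment selector

- Select the logs of the corresponding environment

k8s-test-*

k8s-prod-*

Project selector

- Corresponds to the PROJ_NAME value set for filebeat

- Add a filter

- topic is ${PROJ_NAME}

dubbo-demo-service

dubbo-demo-web

Keyword selector

- exception

- error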